Performance testing is a crucial part of software development. It evaluates and validates the efficiency, speed, scalability, stability, and responsiveness of an application under a variety of workload conditions. Conducted in a controlled environment, it simulates real-world load scenarios to anticipate how the application behaves and responds to network traffic and user actions.

What's a standard deviation?

Standard deviation is a statistical measure of the variability, or spread, of a set of data points: it describes how much the data deviates from the mean (average) value. A low standard deviation indicates that the data is tightly clustered around the mean, while a high standard deviation indicates that the data is spread out over a wider range. In performance testing, this gives insight into the consistency and reliability of a given metric, which can be useful in spotting potential performance bottlenecks.
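To make the definition concrete, here is a minimal Python sketch that computes the mean and standard deviation of two sets of response times. The sample values and the `summarize` helper are hypothetical and chosen only for illustration. The sketch uses the population standard deviation (`statistics.pstdev`); a reporting tool may use the sample variant instead.

```python
import statistics


def summarize(samples_ms):
    """Return (mean, population standard deviation) of response times in ms."""
    mean = statistics.fmean(samples_ms)
    std_dev = statistics.pstdev(samples_ms)
    return mean, std_dev


# Hypothetical response times in milliseconds; both sets average 100 ms.
steady = [100, 102, 98, 101, 99]
spiky = [20, 30, 25, 400, 25]

for name, samples in (("steady", steady), ("spiky", spiky)):
    mean, std_dev = summarize(samples)
    print(f"{name}: mean={mean:.1f} ms, std dev={std_dev:.1f} ms")
```

Both sets have the same average, so the mean alone cannot tell them apart. The standard deviation shows that the second set is far less consistent.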

Importance of Standard Deviation in Performance Testing

Standard deviation plays an important role in performance testing. It provides an objective measure of the variation in system performance and so highlights the stability of the application. A high standard deviation indicates wide variation in the performance results and could be symptomatic of inherent problems within the software, while a low standard deviation reflects well on system stability.

Thus, including standard deviation in performance testing is not just informative but also crucial for focused and efficient optimization of system performance. It serves as a compass for test engineers, guiding their efforts towards areas that show significant deviations and require improvement. This makes standard deviation indispensable when conducting performance testing.

Practical Examples of Standard Deviation in Performance Testing

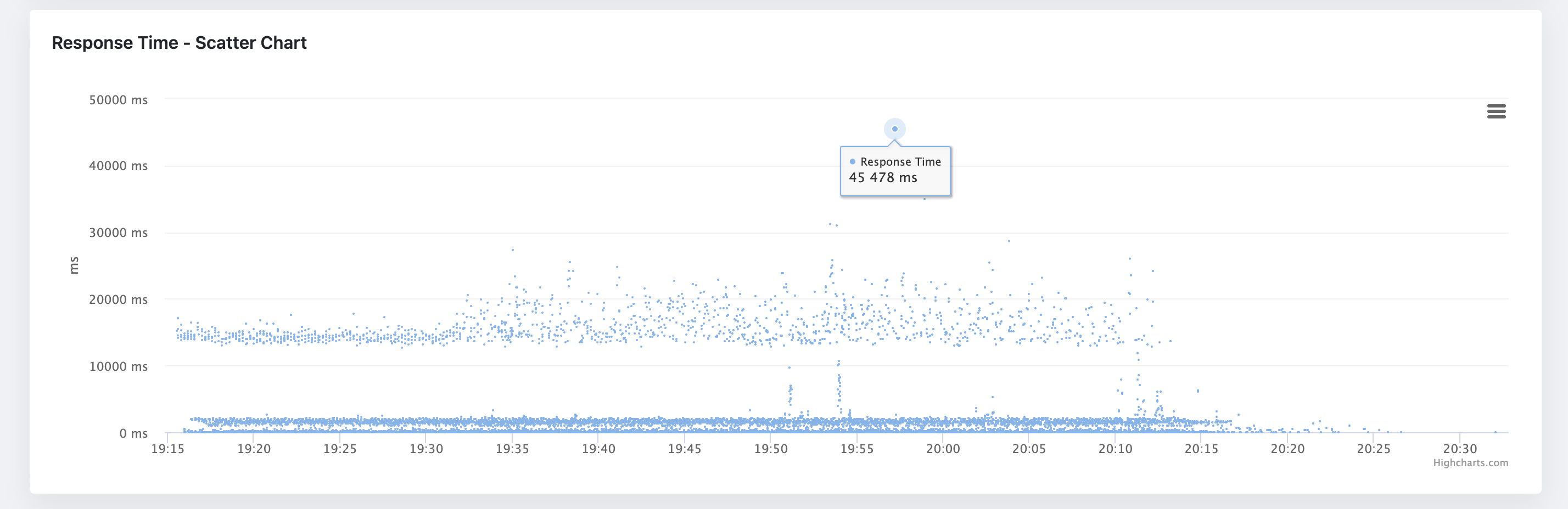

For instance, if the software's response time observations have a low standard deviation, the response times are consistent under variable loads. If the standard deviation is high, you as a tester need to delve further into performance analysis and pinpoint the potential bottlenecks. It essentially acts as a roadmap, directing you towards the performance-related fixes required to achieve an optimally performing website or application.

The standard deviation also reflects the data's distribution pattern. As a rule of thumb, if the standard deviation is greater than half of the mean, the data most likely does not follow a normal distribution. The closer the data is to a normal distribution (bell curve), the higher the chances that the measured data does not include any suspect behavior.

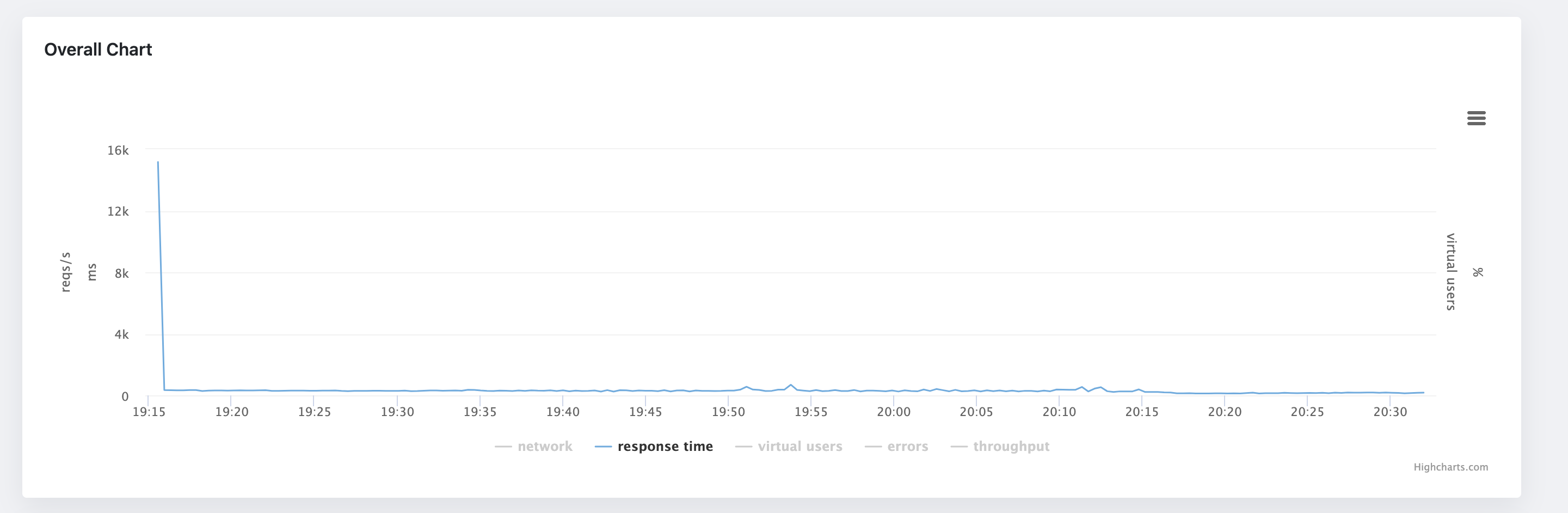
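The rule of thumb above (standard deviation greater than half of the mean) can be sketched as a simple check. This is an illustrative sketch only: the function name `flag_high_variation`, the `ratio_threshold` parameter, and the sample data are assumptions made for this example, not part of any particular tool.

```python
import statistics


def flag_high_variation(samples_ms, ratio_threshold=0.5):
    """Return (flagged, ratio), where ratio = standard deviation / mean.

    flagged is True when the ratio exceeds ratio_threshold, i.e. when the
    standard deviation is greater than half of the mean by default.
    """
    mean = statistics.fmean(samples_ms)
    if mean == 0:
        raise ValueError("mean is zero; the ratio is undefined")
    ratio = statistics.pstdev(samples_ms) / mean
    return ratio > ratio_threshold, ratio


# Hypothetical response times in milliseconds.
steady = [100, 102, 98, 101, 99]
spiky = [20, 30, 25, 400, 25]

for name, samples in (("steady", steady), ("spiky", spiky)):
    flagged, ratio = flag_high_variation(samples)
    status = "investigate" if flagged else "ok"
    print(f"{name}: std dev / mean = {ratio:.2f} -> {status}")
```

A flagged result does not prove a defect. It only marks the measurement as a candidate for closer analysis, for example by looking at the response time distribution or at individual outliers.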

Incorporating Standard Deviation in Performance Testing Reports through JTL Reporter

Analytical tools have become essential for assessing software performance. JTL Reporter is one such platform: it helps with recording, analyzing, and sharing the results of performance tests. It integrates standard deviation into performance test reporting, offering a holistic overview of system performance and stability that supports informed testing decisions.