As technology continues to advance, organizations are becoming increasingly reliant on computer systems to support their operations. To ensure that these systems perform well, it is essential to measure their performance. A good performance test report contains many metrics, but they can be difficult to interpret for anyone who is not a professional performance tester. That’s where the APDEX (Application Performance Index) metric becomes useful: it condenses response-time data into a single, easy-to-understand score that helps organizations see how well their systems are performing and identify areas for improvement. In this blog post, we will explore what the APDEX metric is, how it works, and its advantages and disadvantages.

What is the APDEX Metric?

The APDEX metric is a standardized way of measuring the performance of computer systems. It is based on the response time of a system, which is the amount of time it takes for a system to respond to a user request. The APDEX metric is calculated using a formula that takes into account the number of satisfactory, tolerable, and unsatisfactory responses.

How Does it Work?

To calculate the APDEX score, organizations need to set two response-time thresholds: the satisfactory threshold and the tolerable threshold. Both are set based on the specific requirements of the system. Once the thresholds are decided, the APDEX formula is applied to the response time data:

APDEX = (Satisfied Count + (Tolerated Count / 2)) / Total Count

Where:

- Satisfied Count is the number of responses that fall within the satisfactory threshold

- Tolerated Count is the number of responses that fall between the satisfactory and tolerable thresholds

- Total Count is the total number of responses
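The formula can be sketched in a few lines of Python. This is a minimal illustration, not how any particular tool implements it: the function name `apdex_score`, the sample response times, and the 500 ms / 2000 ms thresholds are all made up for the example, and treating a response exactly on a threshold as falling within it is an assumption.

```python
def apdex_score(response_times, satisfied_threshold, tolerated_threshold):
    """Return the APDEX score for response times given in the same unit as the thresholds."""
    if not response_times:
        raise ValueError("at least one response time is required")
    if satisfied_threshold > tolerated_threshold:
        raise ValueError("satisfied_threshold must not exceed tolerated_threshold")

    # Assumption: a response exactly on a threshold counts as within it.
    satisfied = sum(1 for t in response_times if t <= satisfied_threshold)
    tolerated = sum(
        1 for t in response_times
        if satisfied_threshold < t <= tolerated_threshold
    )
    # Responses slower than the tolerable threshold only add to the total.
    return (satisfied + tolerated / 2) / len(response_times)


# Hypothetical response times in milliseconds.
samples = [120, 300, 450, 800, 1500, 2500, 90, 480, 1900, 3200]
score = apdex_score(samples, satisfied_threshold=500, tolerated_threshold=2000)
print(score)
```

With these sample values, 5 responses are satisfactory, 3 are tolerable, and 2 exceed both thresholds, so the score is (5 + 3/2) / 10 = 0.65.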

The resulting score ranges from 0 to 1, with 1 being the best possible score. Based on the APDEX score, the application’s performance is rated as follows:

- Excellent: 0.94 to 1.00

- Good: 0.85 to 0.93

- Fair: 0.70 to 0.84

- Poor: 0.50 to 0.69

- Unacceptable: below 0.50
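The rating bands above can be turned into a small lookup. The sketch below is illustrative only: `apdex_rating` is a hypothetical helper, and because the bands are stated to two decimal places, it assumes that a score falling between two bands (for example 0.935) belongs to the lower one.

```python
def apdex_rating(score):
    """Map an APDEX score between 0 and 1 to its rating label."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("an APDEX score must be between 0 and 1")

    # Assumption: each band starts at its lower bound, so a value such as
    # 0.935 is rated "Good" rather than "Excellent".
    if score >= 0.94:
        return "Excellent"
    if score >= 0.85:
        return "Good"
    if score >= 0.70:
        return "Fair"
    if score >= 0.50:
        return "Poor"
    return "Unacceptable"


for value in (0.97, 0.88, 0.72, 0.65, 0.30):
    print(value, apdex_rating(value))
```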

Advantages of the APDEX Metric

The APDEX metric has several advantages that make it a popular choice for application performance measurement. Some of these advantages include:

- Simplicity: The APDEX metric is easy to understand and use, making it accessible to organizations of all sizes and technical abilities.

- Standardization: The APDEX metric is a standardized tool, which makes it easy to compare the performance of different systems.

- Flexibility: The thresholds for response time can be adjusted to suit the specific requirements of the system, which means that the APDEX metric can be applied to a wide range of systems.

Disadvantages of the APDEX Metric

Despite its popularity, the APDEX metric also has disadvantages that must be taken into account. Some of these disadvantages include:

- Lack of Variability: A single APDEX score does not account for the variability of performance over time, which means that it may not capture performance issues that occur at specific times or under certain conditions.

- Limited Scope: The APDEX metric is based only on the response time of a system, which is not the only factor that contributes to the user experience. The availability, reliability, and functionality of the system also play a significant role in determining the user experience.
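The variability limitation is easy to see with numbers. The sketch below computes one score for a whole test run and then one score per time window; the helper names (`apdex_score`, `apdex_by_window`), the timestamps, the response times, and the 500 ms / 2000 ms thresholds are all invented for illustration.

```python
from collections import defaultdict


def apdex_score(response_times, satisfied_threshold, tolerated_threshold):
    """APDEX = (satisfied + tolerated / 2) / total."""
    satisfied = sum(1 for t in response_times if t <= satisfied_threshold)
    tolerated = sum(
        1 for t in response_times
        if satisfied_threshold < t <= tolerated_threshold
    )
    return (satisfied + tolerated / 2) / len(response_times)


def apdex_by_window(samples, window_seconds, satisfied_threshold, tolerated_threshold):
    """Group (timestamp_seconds, response_time) pairs into windows and score each one."""
    windows = defaultdict(list)
    for timestamp, response_time in samples:
        windows[int(timestamp // window_seconds)].append(response_time)
    return {
        index * window_seconds: apdex_score(times, satisfied_threshold, tolerated_threshold)
        for index, times in sorted(windows.items())
    }


# Hypothetical run: (seconds since start, response time in ms).
# The second minute contains a slowdown.
samples = [
    (5, 100), (20, 200), (35, 150), (50, 300),
    (65, 2500), (80, 3000), (95, 1800), (110, 2200),
    (125, 120), (140, 250), (155, 180), (170, 400),
]

overall = apdex_score([rt for _, rt in samples], 500, 2000)
per_window = apdex_by_window(samples, 60, 500, 2000)
print(overall)
print(per_window)
```

For this made-up data the overall score is about 0.71, which rates as Fair, while the per-minute view shows two perfect minutes and one minute that scores 0.125. Looking at the score per time window is one way to recover the detail that the single number hides.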

Conclusion

The APDEX metric can be a useful tool for measuring the performance of computer systems. It is easy to use, standardized, and flexible. However, it also has some limitations that must be considered before using it. Organizations should use a combination of metrics to get a more complete picture of system performance.

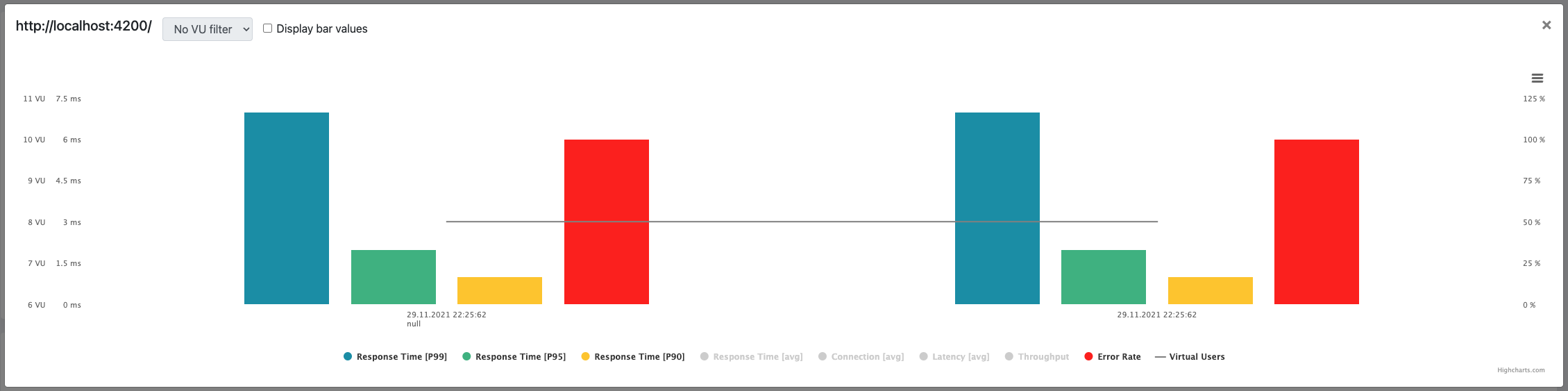

A recent release of JtlReporter added support for measuring the APDEX score. You can now get the APDEX score for your JMeter or Locust.io test reports: either get started with JtlReporter or upgrade your instance to the latest version. The metric is optional and can be turned on in the scenario settings, and both threshold values are adjustable to match the requirements of your system.